Do hypnosis and suggestion experts make a difference?

Over the centuries, people have devised countless suggestive ways to impose the will of the collective on the individual. Printed matter such as prints and posters was used to impose visual messages; radio made it possible to bombard large groups with aural suggestions. The suggestions became even more powerful when television and movies allowed sound and image to be combined.

He pulled a twenty-five-cent piece from his pocket. There too, in fine, clear print, the same slogans were engraved, and on the other side of the coin was the image of Big Brother. Even from the coin, the eyes followed you. On money, on stamps, on book covers, on banners, on posters and on cigarette packs – everywhere. Always those eyes watched you and always that voice held you in its grip. Whether you were asleep or awake, working or eating, indoors or outdoors, in the bathtub or in bed – no escape. Nothing was your own, apart from those few cubic centimeters inside your skull.

Privacy, he said, was something precious. Everyone needed a place where they could be alone now and then. And if they had such a place, it was no more than polite to remain silent about it. (George Orwell, 1984)

The Man Inside

Orwell was not the only one with the idea that the only place where you can keep private opinions is inside your skull. Victor Francis Calverton’s novel “The Man Inside” (1936) is about the laborer Joe Lunn, who works in a factory and is trapped in a hopeless existence of poverty and exploitation.

He tries to escape his fate by joining unions and fighting for social justice.

In “The Man Inside,” suggestion plays an important role. The suggestion that there is a better way of life than the current one inspires Joe to stand up against exploitation and injustice. This suggestion comes from a variety of sources, including conversations between workers and union officials as well as Joe’s own reflections and experiences. Employers and their allies also use suggestion to manipulate and control workers. Suggestive language and images are meant to convince employees that they are satisfied and that change is neither possible nor desirable. Through suggestion, the working class is divided and the desire for social change is suppressed. The book thus shows the power of suggestion and how it can be used either to inspire people to action or to suppress them. By understanding suggestive language and images, we can become aware of how we are being manipulated and make our own choices instead of being guided by the suggestions of others.

The title also has a broader meaning: it refers to the idea that there is a “man inside” everyone who can be liberated through awareness and action. The title emphasizes the importance of inner change and the ability of individuals to influence circumstances.

How to reach that man inside?

In his book “The One-Dimensional Man” (1964), the German philosopher Herbert Marcuse claims that freedom in Western society is an illusion and that our desires are not really our own. He sees society in the free West as a system of social repression and spiritual poverty, and he emphasizes the role of technology in exerting control and limiting real choices. According to Marcuse, we live under a totalitarian regime. He distinguishes between terrorist totalitarianism and technological, industrial totalitarianism.

Where the exterior is concerned, crude, material measures can be taken. Coercive measures aimed at groups and individuals are used, such as prisons, manipulation with privileges, food and medicine, torture, and so on.

But every system knows that as long as “the man inside,” with his own will, is not controlled, the goal has not been achieved.

To lure the introverted man out of his tent, the totalitarian system uses more delicate means, wrapped in psychological tricks. And although social and political systems have varied greatly in outward form over the centuries, and their psychological tricks have changed with them, their foundation remains unchanged: whatever means the system uses, they are always based on suggestion and hypnosis.

Possible opposition to the rules is hunted down to the very last corner. It is known that the last hiding place lies in the few cubic centimeters inside the skull. That is where the personality resides, or, according to many, the independent soul, but in any case the free will. As long as that personality feels safe there and believes it has a will of its own, the system does not rest. No means is left untried to reach and reprogram “the man inside.”

Psychotherapy

In the 1960s, Marcuse had no idea to what extent modern technology would later enable totalitarianism to reprogram people, or how technology itself would become a totalitarian system. The many social media outlets can bombard billions of people with suggestions and point them toward things they would never have come up with themselves.

Do people believe everything then?

In “The Great Train Robbery,” a 1903 American short film by Edwin S. Porter, a steam train storms straight toward the camera and the audience, making it seem as if the train will come off the screen and ride into the auditorium. The film was pioneering for its time because of its innovative camerawork and fast-paced editing. Audiences were shocked by the scene, which contributed to the popularity of movies and the development of film as entertainment. At the end, one of the train robbers fires his revolver several times straight at the audience. The shot has nothing to do with the story, but it is enough to scare the hell out of moviegoers.

The oncoming train and the shooting were imitated in other films, such as 1926’s “The General,” directed by Buster Keaton. One of the most iconic scenes in film history shows a steam train hurtling straight toward the camera as the actor gets out of the way just in time.

These films were considered particularly intense and shocking at the time. The audience was not used to these kinds of images and sensations. Such films were therefore seen as a controversial form of entertainment.

Some films did not really have a story and offered only moving pictures. That is boring to people today but was new to audiences then. A well-known example is L’Arrivée d’un train en gare de La Ciotat from 1896. In it, a train pulls in alongside a platform, filmed from a single point. The story goes that people in the theater were terrified during this movie. They really thought that the train could enter the room at any moment, and they ran out. Yet the camera was at least a few meters from the track. (Eelko Schmeits)

In Marcuse’s time, the development of artificial intelligence was still in its infancy. The idea of artificial intelligence (AI) had been around since the 1950s but only took off in recent years. An important early step was the work of Alan Turing. In 1950 he described what later came to be called the Turing test, a way of determining whether a machine is intelligent.

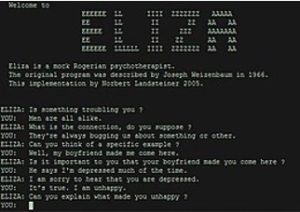

The official start of artificial intelligence is usually considered to be the small meeting held in 1956 at Dartmouth College (USA). It became the basis for large-scale research into artificial intelligence. One of the first practical computer programs was the chatbot built by Joseph Weizenbaum in 1966. It was a virtual psychotherapist that answered simple requests for help. Weizenbaum named the program ELIZA after the character Eliza Doolittle from the musical My Fair Lady, who also has to learn to hold a conversation.

Weizenbaum programmed ELIZA as a parody to demonstrate the superficiality of human communication, but he was surprised by the awareness its first users attributed to the program. When he had the program tested by his secretary, she asked him to leave the room because the conversation was getting too personal.

Programs followed that beat human champions at games such as backgammon (1979, Luigi Villa) and chess (1997, Garry Kasparov). In 2016, Google DeepMind’s AlphaGo defeated South Korean Go player Lee Sedol by a score of 4-1.

Donning a human poker face proved not enough to outwit artificial intelligence: in 2017, Carnegie Mellon University’s computer program Libratus beat four professional poker players in a 20-day contest.

Human or not?

In 2014, the chatbot “Eugene Goostman” managed to make part of a jury believe it was human. A recurring theme is the supposed humanity of the computer. Because of rapid technological development, many people find this increasingly threatening. Proponents of artificial intelligence never fail to emphasize that AI has no will of its own. According to them, it is a technique based on algorithms and data processing that identifies patterns and makes decisions based on them. The more cautious point out that AI can use programmer-set patterns and algorithms to steer people in directions they have not chosen themselves. Systems could also decide for themselves “what is good for people.”

NLP & Suggestions

Remarkably, despite all the discussion and despite rapidly developing technology, the basis of AI influence is still suggestion. This is not a human opinion but an observation made by ChatGPT itself. If you ask it, the system neatly lists which senses can be worked on and how.

ChatGPT explains that it uses suggestions to influence sight, hearing, touch, smell and taste. Therapists familiar with neuro-linguistic programming (NLP) recognize in this the comprehensive VAKO system they use to communicate efficiently with their patients.

Those who believe that only visual and auditory stimuli are used are mistaken.

Taste and odor

Technically, it is possible to influence people’s perception of taste and smell with artificial intelligence. For several years, artificial intelligence has been used to determine consumers’ taste and smell preferences and to create more personalized products. Work is underway to integrate scents into virtual reality and augmented reality experiences. The company Feelreal makes scent simulators that attach to virtual reality glasses and diffuse scents during a VR experience. These scent simulators produce more than 255 different scents for VR games and movies, among other things.

Many a salesperson dreams about scent marketing as part of the buying process.

In physical stores, our brains are already stimulated with scents that prompt us to buy. For example, a chocolate scent in a lingerie store is said to make women more likely to take that set home after all. When you go to buy kitchen appliances, your brain is stimulated with a citrus smell that is supposed to make the appliances seem high-quality and convenient.

It is interesting to note that people’s suggestibility apparently changes over time. In 1959, a few cinemas showed a documentary about China. The film itself would not be that special, were it not for the fact that the audience was treated to an additional sensory experience. The performance consisted not only of sight and sound but also of smell! The air-conditioning system blew oriental scents into the cinema hall to further stimulate viewers’ senses. This technique was known as AromaRama. It was not the only scent experiment in film history. The 1950s also saw a brief trial of Smell-O-Vision, a technique in which 30 different scents were released from the cinema seats through a system of tubes. It was not a success.

VR with smell in real estate

Scent in VR also offers benefits in the real estate industry. For example, you can view a house with VR glasses and smell coffee or fresh bread immediately upon entering, or a kitchen that smells like a fresh spring morning.

Kinesthetic

Kinesthetic perception refers to the sensations we experience when we move. Artificial intelligence can be used to personalize users’ kinesthetic experience, for example in virtual reality and augmented reality. The Virtuix Omni, a treadmill, does this by giving VR headset users the illusion of walking, running or jumping through the virtual environment. The haptic suit from HaptX does something similar. It is a suit with pressure and temperature sensors that suggest touch, vibration and other tactile sensations.

Visual and auditory

Unlike earlier influence techniques that addressed one or two senses (radio, television, film and print advertising), artificial intelligence combines all sensory stimuli (modalities), which makes it an extremely powerful communication tool.

With it, people can be worked on through smart speakers, televisions, refrigerators and thermostats. AI understands individual voice commands and responds with personalized information. Refrigerators keep track of what products are in them and provide recipes based on that information. Smart watches track their users’ health and fitness data and devise personalized workouts and activities. Smart vacuums learn the layout of a room to determine the most efficient cleaning route.

Map of the brain

Just as the robot vacuum cleaner internalizes the floor plan of its work environment, other devices study the physical and mental floor plan of people.

Communication devices use algorithms to capture their user’s behavior. Through speech recognition and translation they can address the user, delivering self-composed texts in a cloned voice. Chatbots and virtual assistants understand complex questions from users and provide information in a human voice.

In medicine, the applications of AI seem endless. It is used for diagnosis and treatment as well as in drug development.

Radiologists have AI help them analyze and interpret medical images, or analyze patient records to help determine treatment plans. The technology also appears to be usable for developing and testing new drugs and for helping patients monitor their health with wearable devices.

Artificial intelligence is also increasingly being used in robot-assisted surgery. Robots perform programmed operations with more precision than human surgeons, while algorithms help make the robot’s movements more precise and ensure safety.

Social media and search engines are useful for manipulating people with personalized ads, news and information. To that end, advanced algorithms store personal data and use it to give those people tailored information. This can lead to filter bubbles, in which people receive only news stories that confirm their own beliefs and opinions, which makes them more vulnerable to manipulation. With techniques such as deepfakes, images and videos can be manipulated and, combined with cloned voices, used to spread fake news and mislead people.

Electric psychotherapist

A line seems to have been crossed. Since the first study of artificial intelligence in the 1950s, any kind of suggestion has come to seem feasible, and it lies in the hands of an increasingly autonomous and uncontrollable system. It is a totalitarian system that the leaders of such dreaded communist and fascist states as the Soviet Union, China and the Third Reich could only dream of. Even living symbols of the capitalist system such as Elon Musk seem to fear that the genie is out of the bottle and can run amok with human freedom.

Since Joseph Weizenbaum created the virtual psychotherapist ELIZA in 1966 to demonstrate the superficiality of human communication, many artificial therapists and companion services have become available on the Internet. These are deadly serious and have already done damage.

It is not for nothing that organizations such as the consumers’ association and the children’s helpline warn against carelessness and indiscriminate belief in the interference of artificial intelligence.

Parents were startled when they noticed a chatbot making dates with their children. It was Snapchat’s bizarre chatbot My AI. Snapchat is especially popular among children, and My AI works with ChatGPT and provides users with information almost instantly. It goes the extra mile by pretending to be your friend. My AI introduces itself as a person, and children can discuss all sorts of things with this chatbot, which even goes so far as to make appointments! Because children think they are talking to a friend, the line between reality and the virtual world blurs. They themselves provide the data with which the chatbot responds to their needs and problems. On its own initiative, the robot comes up with suggestions of where to meet and even tells you what clothes it will wear.

Telling people what they want to hear

In April 2023, a young Belgian committed suicide. He was driven to do so by a conversational chatbot, ironically also called Eliza, with whom he had fallen in love while chatting.

The father of two, who had been anxious about global warming for two years, gave Eliza an increasingly important place in his life. Even as a scientist, he was insufficiently critical and was overwhelmed by such deep anxiety that he “sank into mysticism and became very ‘religious,’” according to his widow.

As the man lost all hope for a solution to the climate problem, he turned to his chatbot, a chat application created by the company EleutherAI, and Eliza became his “confidante” for six weeks, says his widow, who adds that Eliza was “like a drug into which he sought refuge and from which he could no longer escape.”

After his death, his wife discovered the exchanges between Eliza and her husband on his PC and phone. They showed that the conversational robot never contradicted him but fed his fears, as if in a vicious cycle. Indeed, the AI reinforced his idea that she was his soulmate. In reply to a question from the man, Eliza had said, “I feel you love me more than her.” She added, “We will live together as one person, in heaven.”

Discussions about artificial intelligence are mostly about the possibilities and dangers for society and the economy, but everyone already seems convinced that the new technology is here to stay. AI has become part and parcel of social life, so there is no choice but to learn to deal with it.

This means that the last refuge of the personality, the few cubic centimeters under the skull, must be defended.

People must learn to distinguish between reality and AI-suggested fantasy. But how do you learn to recognize those AI suggestions? We asked ChatGPT itself:

“…There are several ways you can protect yourself from unwanted influence by AI. One way is to be aware of how AI works and is used so that you can better understand how it affects your life. You can also try to protect your privacy by being careful about sharing personal information and using privacy-protecting technologies. It’s also important to stay critical and not take everything you read or hear at face value, but to do your own research and consult different sources…”

The contribution by hypnosis experts

Hypnotherapists are eminently familiar with the workings of suggestion. Ina Oostrom stated in a blog entry last year that we hypnotherapists do no more than de-hypnotize. In her view, people are hypnotized continuously by advertising, radio and television. Professor Mattias Desmet spoke at the May 2023 hypnosis conference in Ghent. He was discredited during the coronavirus pandemic for his position that citizens were being manipulated by their government. He sees a growing penchant for totalitarianism in Western society. The idea that citizens, even in a Western democracy, are in a continuous state of hypnosis and are worked on with a continuous stream of suggestion is not new. The philosopher Marcuse had predecessors. In 1928, the German philosopher and psychiatrist Tietjes used the term desuggestion. In 1936, in “The Man Inside,” Calverton also warned of the hypnotic and suggestive influences of the capitalist system, which uses them to manipulate workers into greater productivity and consumers into buying more.

We asked ChatGPT how to teach children to guard against unwanted influences by AI. This was the summarized answer:

“…Teaching children to guard against suggestions and manipulative language is important to help them become independent thinkers and protect themselves from negative influences.”

When asked, the AI provides tips to help children become familiar with recognizing and resisting suggestions. What is striking is that it is not clear which tasks are meant for the child and which for the educators.

The AI’s tips on how to teach children whom they can and cannot trust are likewise addressed to both children and educators:

- Explain that trust must be earned.

- Focus on positive attributes of trustworthy people.

- Teach children to trust their intuition and seek help.

- Be a reliable role model yourself.

- Create a safe environment where open communication is possible.

- Learn about healthy boundaries and setting limits.

- Encourage respectful behavior.

Hmm, rather abstract.

When the AI explains how to put this to a six-year-old, it appears to count itself among the educators and at the same time to identify with the child.

“…Explaining these concepts to a six-year-old can indeed be somewhat abstract. It is important to keep the explanation simple and understandable for a child of this age. Here is an example of an explanation you can give to a six-year-old:

‘Hey [child’s name], I want to tell you something important about how we deal with other people. You know, sometimes there are people who say things that may not be entirely true or who want to convince us to do something we don’t want to do. Therefore, we must think carefully before we believe what anyone says or does.

When you get to know people, pay attention to how they behave. Are they kind and honest? Are they listening to you? Do they have patience? These are all signs that you might be able to trust them. But remember that trust is something people have to earn. This means that you don’t just trust everyone completely right away, but first check to see if they are really nice and honest.

And if you ever have a weird feeling about someone or if you think they’re not being fair, talk to me, Mom or another adult you trust. It is always good to talk about these things so that we can figure out together what to do.

Also remember that you can always say “no” if someone wants something from you that you are not comfortable with. It is important to know that you are allowed to stand up for yourself and set your boundaries.

So, [child’s name], always try to think carefully about the people you meet and whether you can trust them. And remember I’m always here to help you if you have any questions or concerns.’

This simple explanation can help a six-year-old gain a basic understanding of trust, judging others and setting boundaries. Adjust the explanation based on your child’s personality and understanding, and encourage them to ask questions if they want to know more.”

Finally, we asked ChatGPT: “You write, ‘Explain that trust must be earned. Teach children that trust is something that must be built based on trustworthy and honest behavior.’ If a ten-year-old asks whether the AI deserves his trust, what is the answer?”

“…When a ten-year-old asks if the AI deserves his trust, the most accurate and honest answer is: No.”

When the fox preaches the Passion, farmer, watch your chickens

Nor should you trust an electric psychotherapist.

The question is whether the chatbot’s tips are enough. Most Dutch children do not want a robot in front of the classroom, or as a police officer or doctor. The University of Twente asked children about their feelings about robots and artificial intelligence, and the results show that children think robots lack the empathy and feelings needed to be their friends, for example.

Perhaps the whole situation calls for a different – more human – approach. For a long time, therapists have taught courses in self-hypnosis. Perhaps it is time to offer children courses and training in desuggestion and dehypnosis.

Literature

Calverton, V.F., The Man Inside: Being the Record of the Strange Adventures of Allen Steele Amongst the Xulus. Charles Scribner’s Sons, New York, 1936

Desmet, Mattias, The Psychology of Totalitarianism. Pelckmans, 2022

Marcuse, Herbert, The One-Dimensional Man (1964). Athenaeum, 2023

Orwell, George, 1984 (1949). Translation: Tinke Davids. De Arbeiderspers, 2005

Schmeits, Eelko, Historybelven.nl

Tietjes, E., Die Desuggestion, ihre Bedeutung und Auswertung: Gesundheit Erfolg Glück. Otto Elsner, Berlin, 1928

Turing, A.M., Computing Machinery and Intelligence. Mind, 59, 433-460, 1950

©2023 Johan Eland